Introduction

In today’s data-informed learning ecosystem, training is no longer judged solely by attendance or satisfaction forms. Trainers must adopt a multi-dimensional, evidence-based approach to evaluating course effectiveness—before, during, and after delivery—to demonstrate real impact, ensure continuous improvement, and align with business outcomes (Kirkpatrick & Kirkpatrick, 2016; CIPD, 2023).

1. Why Evaluate Training?

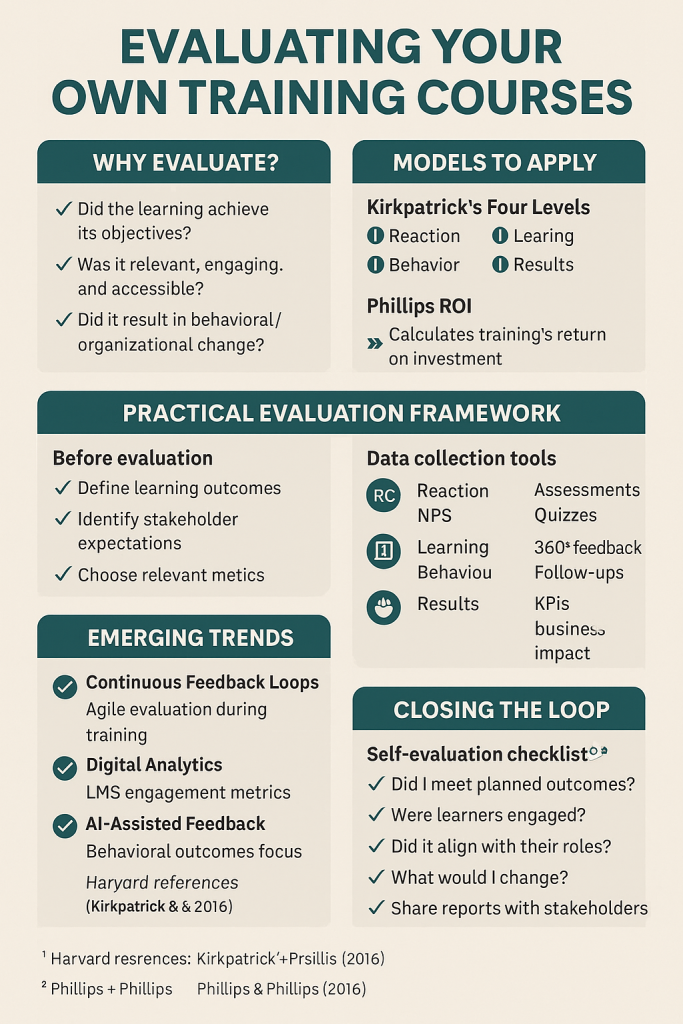

Training evaluation answers three core questions:

Did the learning achieve its objectives?

Was it relevant, engaging, and accessible?

Did it result in behavioural or organisational change?

Regular evaluation enables trainers to:

Continuously improve delivery.

Demonstrate return on expectations (ROE).

Align training with performance metrics and strategic goals (Phillips & Phillips, 2016).

2. Foundational Evaluation Models

✅ Kirkpatrick’s Four Levels (Kirkpatrick & Kirkpatrick, 2016)

Reaction – Did learners find the training favourable, engaging and relevant to their work?

Learning – What knowledge, skills or attitudes changed?

Behaviour – Are learners applying the training on the job?

Results – What organisational value was created?

Practical Tip: Use a combination of post-session surveys, skills assessments, and follow-up interviews to capture data across levels.

📊 Phillips ROI Methodology

Adds a fifth level to Kirkpatrick's model, Return on Investment (ROI). ROI converts business impact data into monetary values and compares them with the fully loaded costs of the programme (Phillips & Phillips, 2016).

Example: Track time savings, productivity gains, or reduced errors post-training.
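
The core Phillips arithmetic can be sketched in a few lines. The sketch assumes the benefits have already been isolated to the training and converted to money (for example, hours saved × hourly cost). On that basis, the benefit-cost ratio is BCR = benefits ÷ costs, and ROI (%) = (benefits − costs) ÷ costs × 100. The class and function names are illustrative, and the figures in the example are invented.

```python
from dataclasses import dataclass


@dataclass
class ProgrammeEconomics:
    """Monetised benefits and fully loaded costs of one training programme."""

    benefits: float  # money value of the impact attributed to the training
    costs: float     # fully loaded costs: design, delivery, materials, learner time


def benefit_cost_ratio(p: ProgrammeEconomics) -> float:
    """BCR = benefits / costs."""
    if p.costs <= 0:
        raise ValueError("costs must be positive")
    return p.benefits / p.costs


def roi_percent(p: ProgrammeEconomics) -> float:
    """ROI (%) = (benefits - costs) / costs * 100."""
    if p.costs <= 0:
        raise ValueError("costs must be positive")
    return (p.benefits - p.costs) / p.costs * 100


if __name__ == "__main__":
    # Invented example: 400 hours saved at 25 per hour, against 8,000 of programme costs.
    example = ProgrammeEconomics(benefits=400 * 25, costs=8_000)
    print(f"BCR: {benefit_cost_ratio(example):.2f}")  # prints BCR: 1.25
    print(f"ROI: {roi_percent(example):.1f}%")        # prints ROI: 25.0%
```

With these invented figures, the programme returns 1.25 in benefits for every unit spent, an ROI of 25%. A BCR of 1.0 corresponds to an ROI of 0%, which is the break-even point.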

3. Practical Evaluation Framework

A. Pre-Evaluation Planning

Define clear learning outcomes using SMART criteria (Specific, Measurable, Achievable, Relevant, Time-bound).

Identify stakeholders’ expectations.

Choose relevant metrics (e.g., behavioural change, quality scores, satisfaction ratings).

Use logic models or a theory of change to map how your training is supposed to create impact (Moore et al., 2015).

B. Data Collection Tools

| Evaluation level | Tool suggestions |
|---|---|
| Reaction | Feedback forms, mood boards, Net Promoter Score (NPS) |
| Learning | Pre- and post-assessments, knowledge checks, quizzes |
| Behaviour | Observations, 360° feedback, follow-up coaching sessions |
| Results | KPIs, performance dashboards, project outcomes |
| ROI | Cost-benefit analysis, business impact metrics |
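
The Net Promoter Score is one of the Reaction-level tools. The usual calculation asks learners how likely they are to recommend the course on a 0-10 scale. It treats ratings of 9-10 as promoters and 0-6 as detractors, then subtracts the percentage of detractors from the percentage of promoters. A minimal sketch, using invented sample ratings:

```python
from collections.abc import Iterable


def net_promoter_score(ratings: Iterable[int]) -> float:
    """Return NPS (-100 to +100) from 0-10 'likelihood to recommend' ratings.

    Promoters rate 9-10 and detractors 0-6; passives (7-8) count only in the total.
    """
    scores = list(ratings)
    if not scores:
        raise ValueError("need at least one rating")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


if __name__ == "__main__":
    # Invented post-session ratings from ten learners.
    responses = [10, 9, 9, 8, 8, 7, 6, 5, 10, 9]
    print(net_promoter_score(responses))  # prints 30.0
```

With only ten responses, a single changed rating can move the score by 10 or even 20 points. For small cohorts, it is worth reporting the raw counts alongside the score.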

Tip: Digital platforms (e.g., Microsoft Forms, Google Classroom, Kahoot, Miro) allow for real-time data capture.
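
One way to summarise the Learning-level pre- and post-assessments is the normalised gain popularised in physics education research. It expresses improvement as a fraction of the improvement that was still possible: g = (post − pre) ÷ (max − pre). The sketch below averages per-learner gains. Computing g from the cohort's average pre and post scores is a common alternative and can give a slightly different figure. The function names and scores are illustrative assumptions.

```python
from statistics import mean


def normalised_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Normalised gain: the share of the possible improvement actually achieved."""
    if pre >= max_score:
        raise ValueError("pre-test score is at the maximum, so the gain is undefined")
    return (post - pre) / (max_score - pre)


def cohort_gains(pairs: list[tuple[float, float]], max_score: float = 100.0) -> dict[str, float]:
    """Mean raw gain and mean per-learner normalised gain for (pre, post) score pairs."""
    if not pairs:
        raise ValueError("need at least one (pre, post) pair")
    raw = [post - pre for pre, post in pairs]
    normalised = [normalised_gain(pre, post, max_score) for pre, post in pairs]
    return {"mean_raw_gain": mean(raw), "mean_normalised_gain": mean(normalised)}


if __name__ == "__main__":
    # Invented pre/post scores (out of 100) for three learners.
    scores = [(40, 70), (60, 80), (50, 75)]
    print(cohort_gains(scores))
```

The normalised figure helps when learners start at very different levels. A 20-point rise from 60 closes half of the remaining gap, just as a 30-point rise from 40 does.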

4. Emerging Trends in Training Evaluation

🔁 Continuous Feedback Loops

Agile evaluation practices involve ongoing feedback during delivery—not just after.

Use live polling, chat reactions, and breakout reflections.

Adapt sessions in real time based on learner input (Salas et al., 2012).
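
Adapting in real time can be made explicit as a simple decision rule over live poll results. The sketch below assumes a multiple-choice check question and a hypothetical 70% threshold for moving on. Both the rule and the threshold are illustrative choices, not values taken from Salas et al. (2012).

```python
from collections import Counter


def poll_decision(answers: list[str], correct: str, threshold: float = 0.7) -> tuple[float, str]:
    """Return the share of correct answers and a suggested next step.

    The 0.7 default is a hypothetical cut-off for illustration, not an evidence-based value.
    """
    if not answers:
        return 0.0, "no responses yet: wait or re-prompt"
    counts = Counter(answers)
    share_correct = counts[correct] / len(answers)
    if share_correct >= threshold:
        return share_correct, "move on"
    # Name the most common wrong option so the recap can target that misconception.
    wrong = [option for option, _ in counts.most_common() if option != correct]
    hint = f" (most common wrong answer: {wrong[0]})" if wrong else ""
    return share_correct, "revisit the concept" + hint


if __name__ == "__main__":
    # Invented responses to a live multiple-choice check where "B" is correct.
    live = ["B", "B", "C", "B", "A", "C", "C", "B"]
    print(poll_decision(live, correct="B"))
```

Reporting the most common wrong answer, not just the score, gives the trainer something specific to address in the recap.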

📱 Digital Analytics

Many learning management systems (LMSs) now include learning analytics that can:

Track engagement (clicks, views, participation).

Monitor time spent on tasks or e-learning modules (Chatti et al., 2012).
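
Many platforms let you export raw activity logs. The sketch below assumes a hypothetical export with one record per learner event (learner, module, event type, timestamp). From that log, it computes a module's completion rate and the average elapsed time from first start to completion. The record layout and the "start"/"complete" event names are assumptions, so map them to whatever your LMS actually exports.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    learner: str
    module: str
    kind: str      # hypothetical event types: "start" or "complete"
    at: datetime


def module_metrics(events: list[Event], module: str) -> dict[str, float]:
    """Learners started, completion rate and mean elapsed minutes to completion for one module."""
    starts: dict[str, datetime] = {}
    completed: set[str] = set()
    minutes: list[float] = []
    for ev in sorted(events, key=lambda item: item.at):
        if ev.module != module:
            continue
        if ev.kind == "start":
            starts.setdefault(ev.learner, ev.at)  # keep each learner's first start
        elif ev.kind == "complete" and ev.learner in starts and ev.learner not in completed:
            # Completions with no earlier start event are ignored.
            completed.add(ev.learner)
            minutes.append((ev.at - starts[ev.learner]).total_seconds() / 60)
    started = len(starts)
    return {
        "learners_started": started,
        "completion_rate": len(completed) / started if started else 0.0,
        "mean_minutes_to_complete": sum(minutes) / len(minutes) if minutes else 0.0,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 15, 9, 0)
    log = [
        Event("ana", "M1", "start", t0),
        Event("ana", "M1", "complete", t0 + timedelta(minutes=30)),
        Event("ben", "M1", "start", t0 + timedelta(minutes=5)),
        Event("ben", "M2", "start", t0 + timedelta(minutes=40)),
    ]
    print(module_metrics(log, "M1"))
```

Elapsed time from start to completion includes any breaks. It will therefore overstate active time on task whenever learners pause, so read it alongside other engagement measures rather than on its own.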

🤖 AI-Assisted Feedback

AI tools such as ChatGPT can help automate the analysis of survey responses and surface trends in learner sentiment and understanding.

🎯 Behavioural Outcomes Focus

Shift focus from “learner satisfaction” to actual behavioural change and transfer of learning into practice (Brinkerhoff, 2006).

5. Practical Steps for Trainers

🛠 Self-Evaluation Checklist

Did I meet all the planned learning outcomes?

Were all learners actively engaged and included?

Did my content align with learners’ real-world roles?

What feedback did I receive (formal and informal)?

What would I do differently next time?

📚 Use Reflective Practice

Adopt tools like Gibbs’ Reflective Cycle or Brookfield’s Four Lenses to reflect on your role, materials, environment, and learner outcomes (Brookfield, 2017).

Consider peer observation or video review to gather insights on your facilitation style.

6. Closing the Loop: Reporting & Action

Create a training evaluation report summarising findings, trends, and future recommendations.

Share insights with managers or stakeholders to support strategic decisions.

Adjust future training designs based on evidence—not assumptions.

Best practice: Link evaluation data to learner performance reviews or business reports to highlight the value of your intervention.

Conclusion

Training evaluation is no longer optional—it’s essential. For trainers, it means thinking like a learning scientist, a data analyst, and a strategic business partner. By combining established frameworks with real-time data and reflective practice, trainers can deliver sessions that are not only engaging but also measurable, accountable, and transformative.

References

Brinkerhoff, R. O. (2006) Telling Training’s Story: Evaluation Made Simple, Credible, and Effective. San Francisco: Berrett-Koehler.

Brookfield, S. D. (2017) Becoming a Critically Reflective Teacher. 2nd ed. San Francisco: Jossey-Bass.

Chatti, M. A., Dyckhoff, A. L., Schroeder, U. & Thüs, H. (2012) ‘A reference model for learning analytics’, International Journal of Technology Enhanced Learning, 4(5/6), pp. 318–331.

CIPD (2023) Learning and Development 2023: Annual Survey Report. London: Chartered Institute of Personnel and Development.

Kirkpatrick, D. L. & Kirkpatrick, J. D. (2016) Evaluating Training Programs: The Four Levels. 3rd ed. San Francisco: Berrett-Koehler.

Moore, D., Green, J. & Gallis, H. (2015) ‘Achieving Desired Results and Improved Outcomes: Integrating Planning and Assessment Throughout Learning Activities’, Journal of Continuing Education in the Health Professions, 29(1), pp. 1–15.

Phillips, J. J. & Phillips, P. P. (2016) Handbook of Training Evaluation and Measurement Methods. 4th ed. New York: Routledge.

Salas, E., Tannenbaum, S. I., Kraiger, K. & Smith-Jentsch, K. A. (2012) ‘The Science of Training and Development in Organizations: What Matters in Practice’, Psychological Science in the Public Interest, 13(2), pp. 74–101.

Practical Guide for Trainers: Evaluating Your Own Training Courses